ChatGPT Search and Perplexity: Initial Experience

Earlier in the semester, Perplexity offered students a one-month free trial of its search engine and LLM offering. The trial was part of a campus campaign: if 500 students at our university registered with their university email, each of them would later get a free year of Perplexity.

Now OpenAI has launched ChatGPT Search. I have been paying for ChatGPT Plus for a year, because I use it for my research and for quick searches anyway, and because I want access to the latest features. So I thought I'd try it out and see how it compares to Perplexity.

I currently work mainly on an inference engine for the LHCb HLT1 trigger, so let's ask a realistic question, something I could find myself searching for during a working session. I will compare the quality of the answers, the relevance of the references and the overall UI experience. I have to admit that I don't like Perplexity's UI at all. It is not user-friendly and it is not easy to navigate; the information it presents feels overwhelming and poorly organized. But let's keep an open mind and see how it goes.

I searched for: "What is the CuDNN method that implements the softmax activation function that can be used in inference engine implementation?"

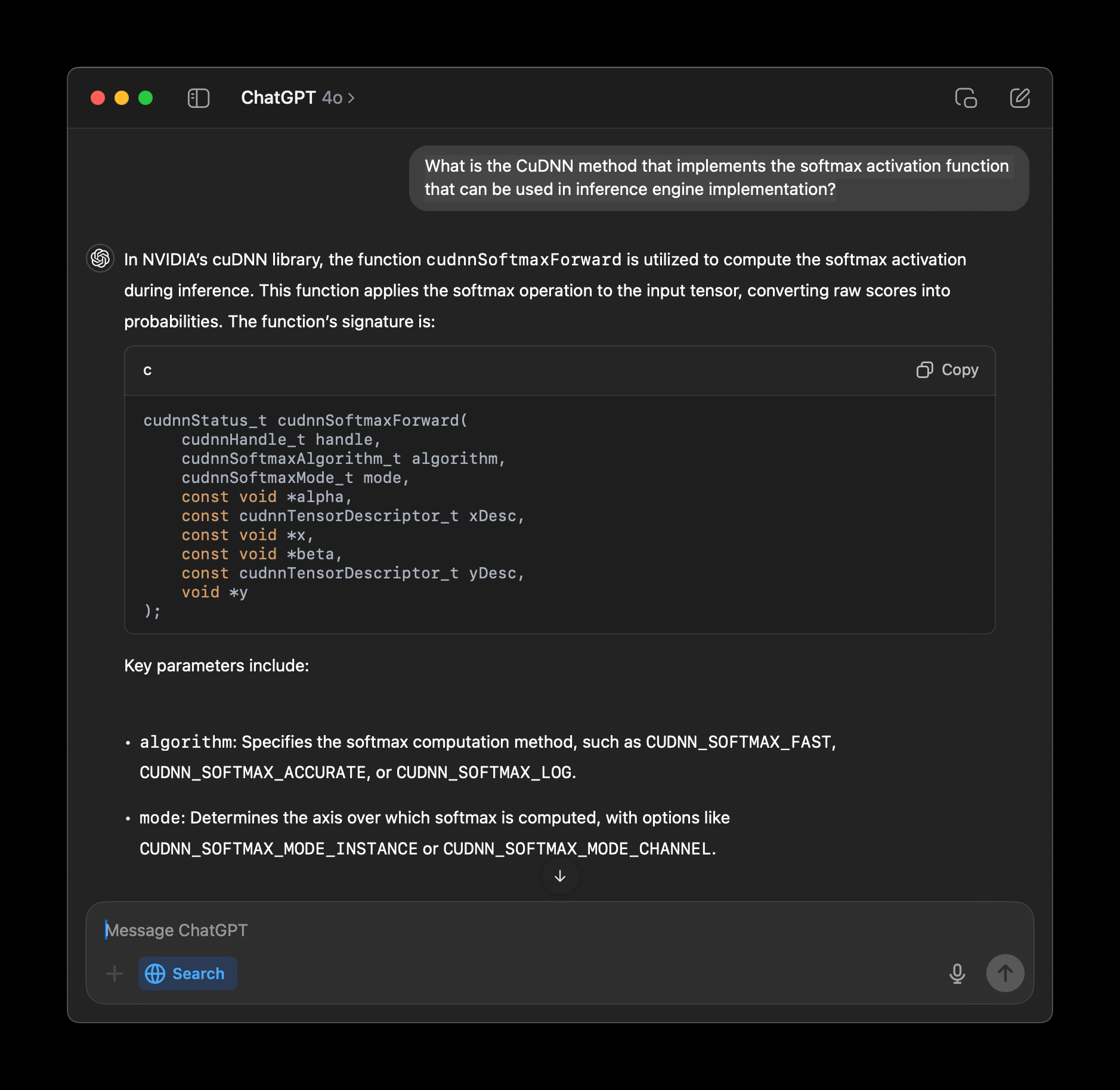

I got the following results from ChatGPT Search:

The screenshot cuts off the last part of the answer, which points to its source: the cuDNN documentation.

Besides many other sources, it specifically adds:

For detailed information, refer to the cuDNN API documentation. (NVIDIA Documentation)

First, the answer is correct: there are many references and most of them are relevant. The answer is taken verbatim from the cuDNN documentation, and the code it presents is the same as in the docs:

```c
cudnnStatus_t cudnnSoftmaxForward(
    cudnnHandle_t                    handle,
    cudnnSoftmaxAlgorithm_t          algorithm,
    cudnnSoftmaxMode_t               mode,
    const void                      *alpha,
    const cudnnTensorDescriptor_t    xDesc,
    const void                      *x,
    const void                      *beta,
    const cudnnTensorDescriptor_t    yDesc,
    void                            *y)
```

So at first glance it looks good. But let's see how it compares to Perplexity.

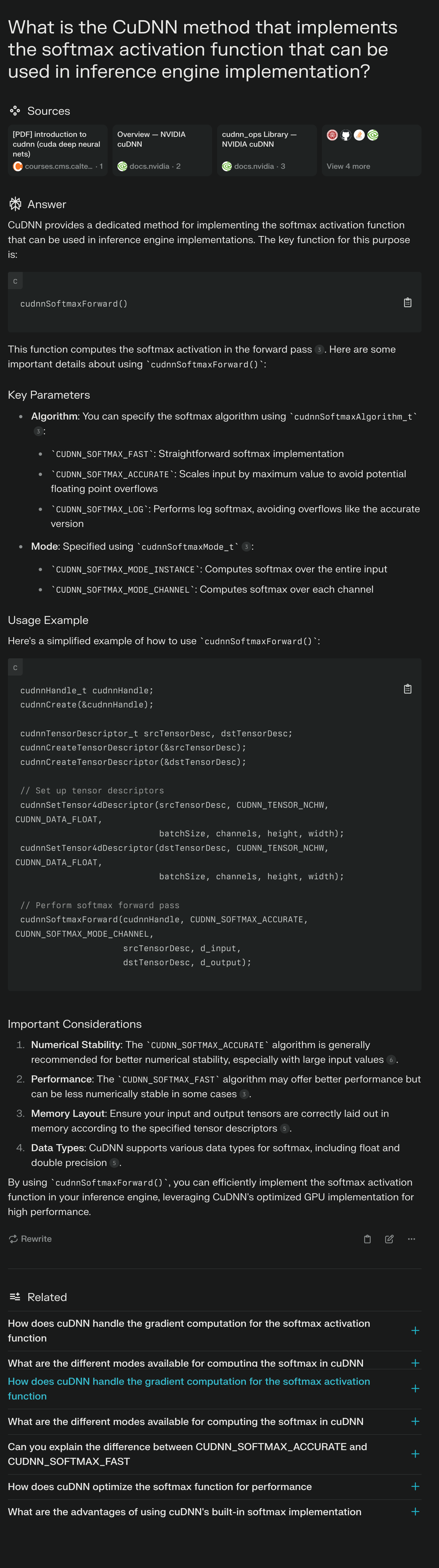

I got the following results from Perplexity:

To be honest, the way the information is presented here looks better: it is more organized and more detailed on how to use the cuDNN softmax function. It also suggests a realistic example of how to use it, showing that we need to construct tensor descriptors in an inference engine. It is more detailed than ChatGPT Search and gives some caveats, which are actually important: I once spent two days tracking down a numerical problem in my inference engine because I had built a custom softmax function that was not numerically stable.

Also, I don't know what to think of the suggested questions at the end. They may be useful if I'm exploring a topic, but if I want something specific and then want to get back to work, they catch my attention and break my focus, and I don't need that distraction. I'm not sure whether there is a way to turn them on and off manually depending on what I'm doing; sometimes I would like to have them on, and sometimes off.

Now let's ask a generic question and see how both perform. I wanted to ask about something I already know the answer to.

I searched for: "What are the 5 big cities in Egypt in terms of population?"

I lived in Egypt for more than 22 years and definitely know the answer to this question. If they answer what I actually asked (cities, not governorates), the answer should be Cairo, Alexandria, Giza, Port Said and Suez. If they go by governorates instead, it should be Cairo, Giza, Sharkia, Dakahlia and Behera. Anything else is an indication that they are probably mixing sources and not really answering the question correctly.

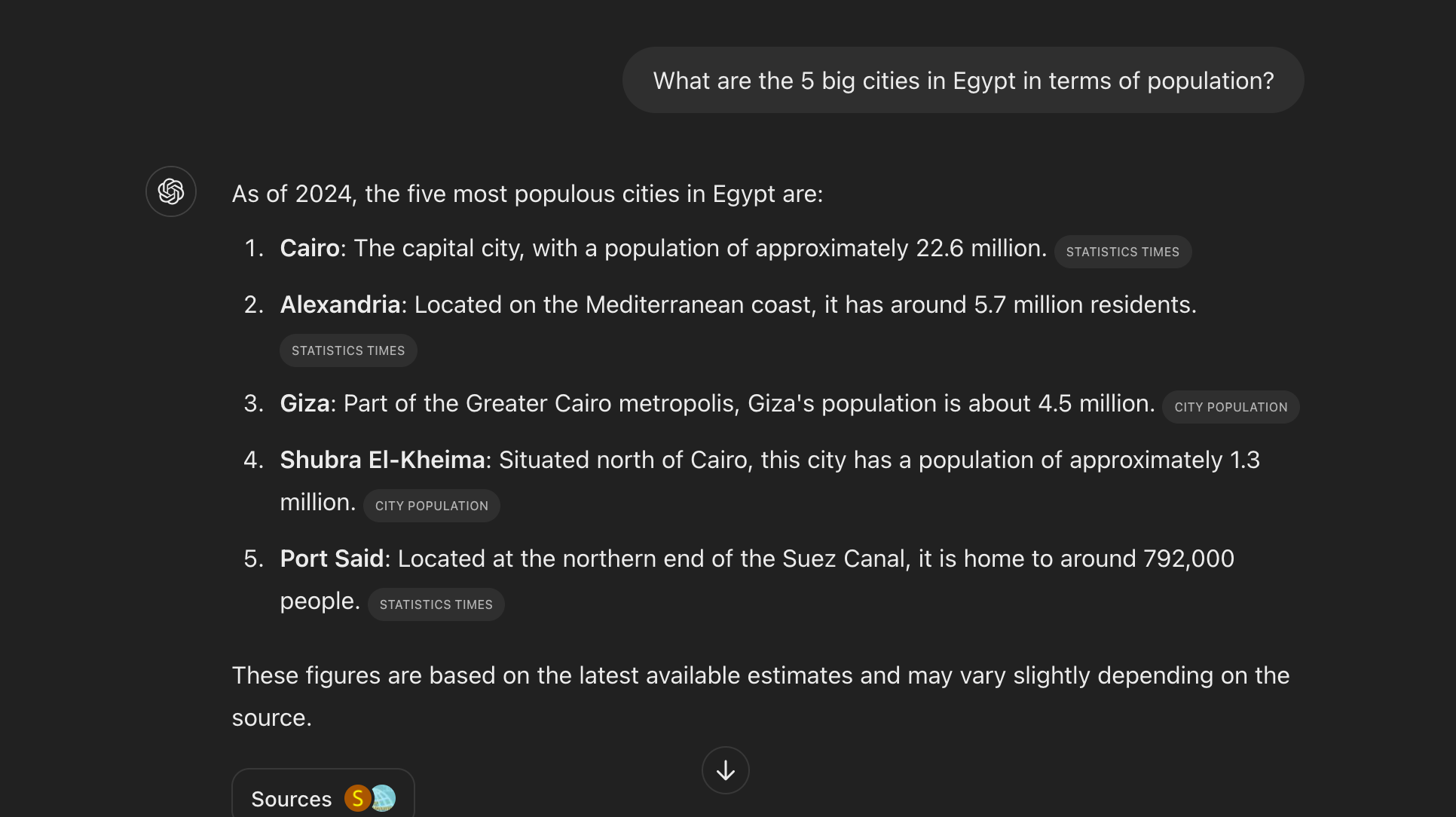

This is ChatGPT Search answer:

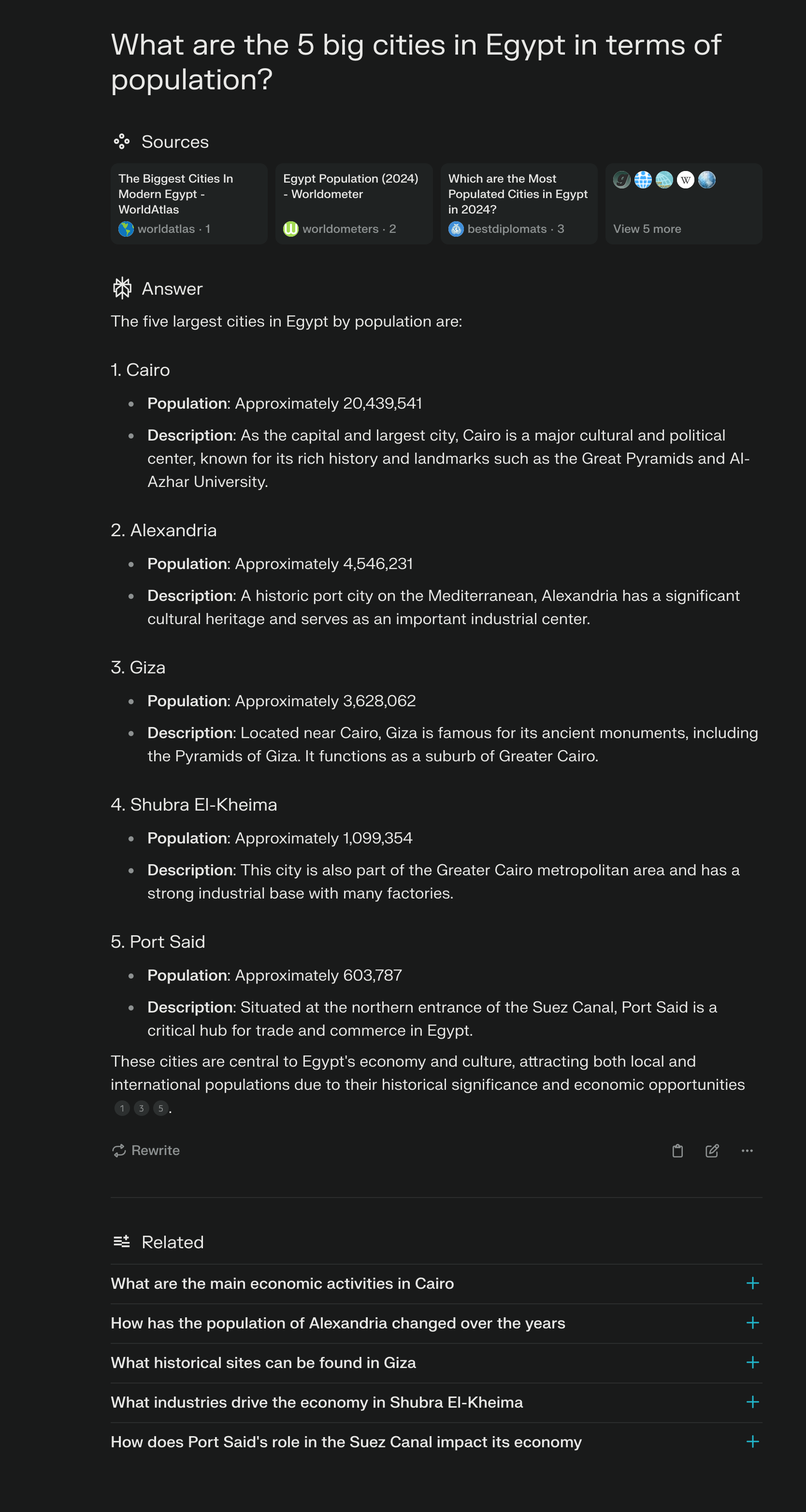

and this is Perplexity:

They both agree that Shubra Al-Kheima is the 4th biggest city in Egypt. That is probably the result of confusing sources that add the population of many smaller surrounding towns to Shubra Al-Kheima. The other four, Cairo, Alexandria, Giza and Port Said, are correct. However, both use wrong figures for Cairo: they attribute to the city the population of the whole Cairo metropolitan area, which also includes Giza, Shubra Al-Kheima and more.

UI Experience

Let's now talk about the UI experience. I liked the bold headings in ChatGPT Search and how concise it is; it is easy to read and navigate. I also liked the references at the end of the answer. ChatGPT Search also felt faster than Perplexity. I don't know whether that is because I'm on Perplexity's free tier, but I think it is simply faster.

I liked the inline citations in Perplexity. For an academic like me, this is how I like to see references. By contrast, in ChatGPT Search it is not clear whether a reference is a citation or not; it just feels like it scrapes references and lists them at the end. To be honest, I think Perplexity's way of presenting references is how it should be done, and this is why I think it is better than ChatGPT Search in its current form. I don't trust LLMs with information, and I have to check the source(s) of any answer. This is the same mental model I have even with normal search engines (I don't copy and paste Stack Overflow answers).

I think providing better references and the extra UI elements takes Perplexity more time, which is probably why ChatGPT Search feels faster.